The BMI lab bridges methodological research in Machine Learning and Sequence Analysis and its application to biomedical problems. We collaborate with biologists and clinicians to develop real-world solutions.

We work on research questions and foundational challenges in storing, analysing, and searching large heterogeneous and temporal datasets, especially in the biomedical domain. Our lab members address technical and non-technical research questions in collaboration with biologists and clinicians. At the research group’s core is an active, two-way knowledge exchange between methods-driven and application-driven researchers.

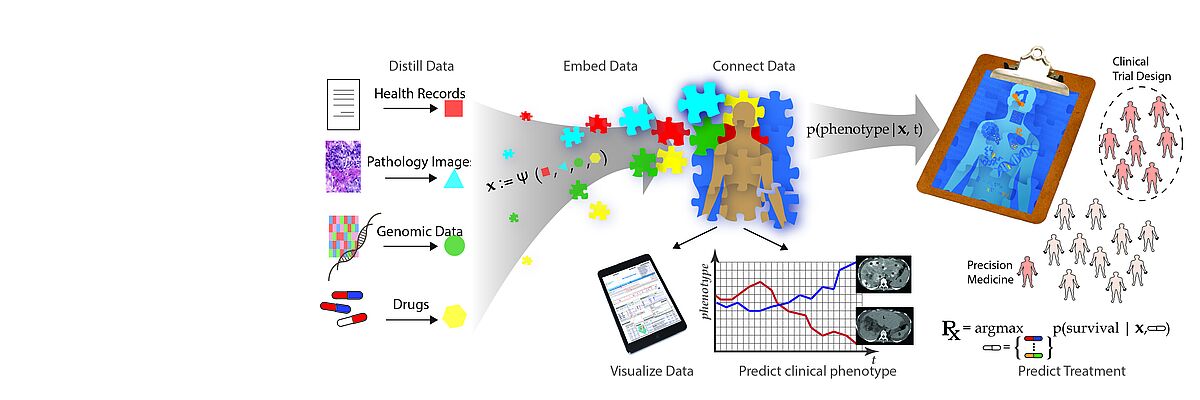

Data-driven medicine leverages data and algorithms to shape how we diagnose and treat patients. Machine Learning approaches allow us to capitalise on the vast amount of data produced in clinical settings to generate novel biomedical insights and build more precise predictive models of disease outcomes and treatment efficacy.

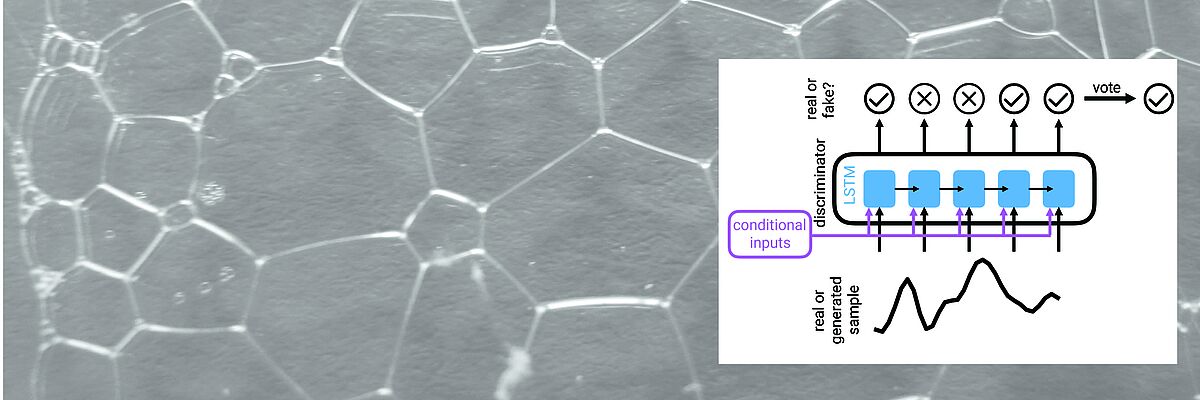

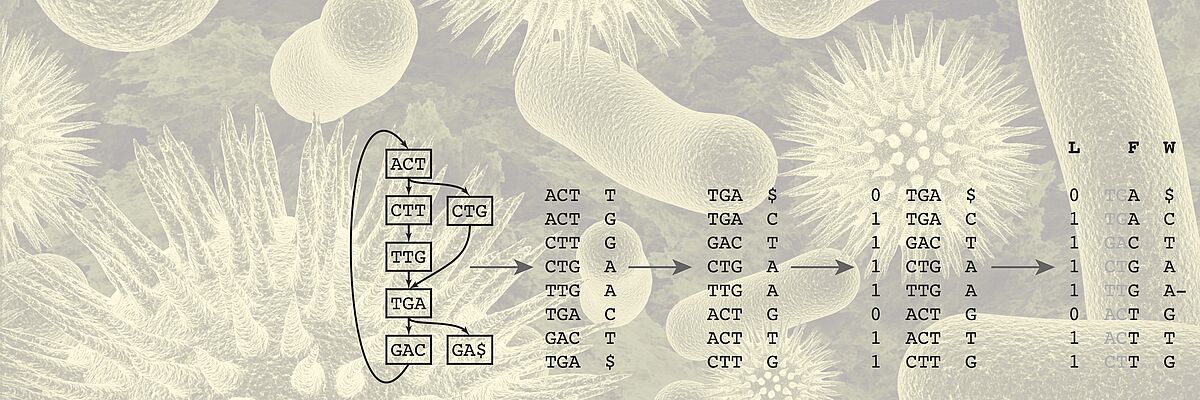

We work towards this transformation mainly, but not exclusively, in two key areas. One is the analysis of heterogeneous data from cancer patients; for genomics in particular, we develop algorithms for storing, compressing, and searching extensive genomics datasets. The other is the development of time series models of patient health states and early warning systems for intensive care units.

Publications

Abstract This study advances Early Event Prediction (EEP) in healthcare through Dynamic Survival Analysis (DSA), offering a novel approach by integrating risk localization into alarm policies to enhance clinical event metrics. By adapting and evaluating DSA models against traditional EEP benchmarks, our research demonstrates their ability to match EEP models on a time-step level and significantly improve event-level metrics through a new alarm prioritization scheme (up to 11% AuPRC difference). This approach represents a significant step forward in predictive healthcare, providing a more nuanced and actionable framework for early event prediction and management.

Authors Hugo Yèche, Manuel Burger, Dinara Veshchezerova, Gunnar Rätsch

Submitted CHIL 2024

Abstract A prominent challenge of offline reinforcement learning (RL) is the issue of hidden confounding: unobserved variables may influence both the actions taken by the agent and the observed outcomes. Hidden confounding can compromise the validity of any causal conclusion drawn from data and presents a major obstacle to effective offline RL. In the present paper, we tackle the problem of hidden confounding in the nonidentifiable setting. We propose a definition of uncertainty due to hidden confounding bias, termed delphic uncertainty, which uses variation over world models compatible with the observations, and differentiate it from the well-known epistemic and aleatoric uncertainties. We derive a practical method for estimating the three types of uncertainties, and construct a pessimistic offline RL algorithm to account for them. Our method does not assume identifiability of the unobserved confounders, and attempts to reduce the amount of confounding bias. We demonstrate through extensive experiments and ablations the efficacy of our approach on a sepsis management benchmark, as well as on electronic health records. Our results suggest that nonidentifiable hidden confounding bias can be mitigated to improve offline RL solutions in practice.

Authors Alizée Pace, Hugo Yèche, Bernhard Schölkopf, Gunnar Rätsch, Guy Tennenholtz

Submitted ICLR 2024

Abstract In this paper, we explore the structure of the penultimate Gram matrix in deep neural networks, which contains the pairwise inner products of outputs corresponding to a batch of inputs. In several architectures it has been observed that this Gram matrix becomes degenerate with depth at initialization, which dramatically slows training. Normalization layers, such as batch or layer normalization, play a pivotal role in preventing the rank collapse issue. Despite promising advances, the existing theoretical results (i) do not extend to layer normalization, which is widely used in transformers, and (ii) cannot characterize the bias of normalization quantitatively at finite depth. To bridge this gap, we provide a proof that layer normalization, in conjunction with activation layers, biases the Gram matrix of a multilayer perceptron towards isometry at an exponential rate with depth at initialization. We quantify this rate using the Hermite expansion of the activation function, highlighting the importance of higher order (≥2) Hermite coefficients in the bias towards isometry.

Authors Amir Joudaki, Hadi Daneshmand, Francis Bach

Submitted NeurIPS 2023 (poster)

Abstract Recent advances in deep learning architectures for sequence modeling have not fully transferred to tasks handling time-series from electronic health records. In particular, in problems related to the Intensive Care Unit (ICU), the state-of-the-art remains to tackle sequence classification in a tabular manner with tree-based methods. Recent findings in deep learning for tabular data are now surpassing these classical methods by better handling the severe heterogeneity of data input features. Given the similar level of feature heterogeneity exhibited by ICU time-series and motivated by these findings, we explore these novel methods' impact on clinical sequence modeling tasks. By jointly using such advances in deep learning for tabular data, our primary objective is to underscore the importance of step-wise embeddings in time-series modeling, which remain unexplored in machine learning methods for clinical data. On a variety of clinically relevant tasks from two large-scale ICU datasets, MIMIC-III and HiRID, our work provides an exhaustive analysis of state-of-the-art methods for tabular time-series as time-step embedding models, showing overall performance improvement. In particular, we evidence the importance of feature grouping in clinical time-series, with significant performance gains when considering features within predefined semantic groups in the step-wise embedding module.

Authors Rita Kuznetsova, Alizée Pace, Manuel Burger, Hugo Yèche, Gunnar Rätsch

Submitted ML4H 2023 (PMLR)

Abstract Clinicians are increasingly looking towards machine learning to gain insights about patient evolutions. We propose a novel approach named Multi-Modal UMLS Graph Learning (MMUGL) for learning meaningful representations of medical concepts using graph neural networks over knowledge graphs based on the unified medical language system. These representations are aggregated to represent entire patient visits and then fed into a sequence model to perform predictions at the granularity of multiple hospital visits of a patient. We improve performance by incorporating prior medical knowledge and considering multiple modalities. We compare our method to existing architectures proposed to learn representations at different granularities on the MIMIC-III dataset and show that our approach outperforms these methods. The results demonstrate the significance of multi-modal medical concept representations based on prior medical knowledge.

Authors Manuel Burger, Gunnar Rätsch, Rita Kuznetsova

Submitted ML4H 2023 (PMLR)